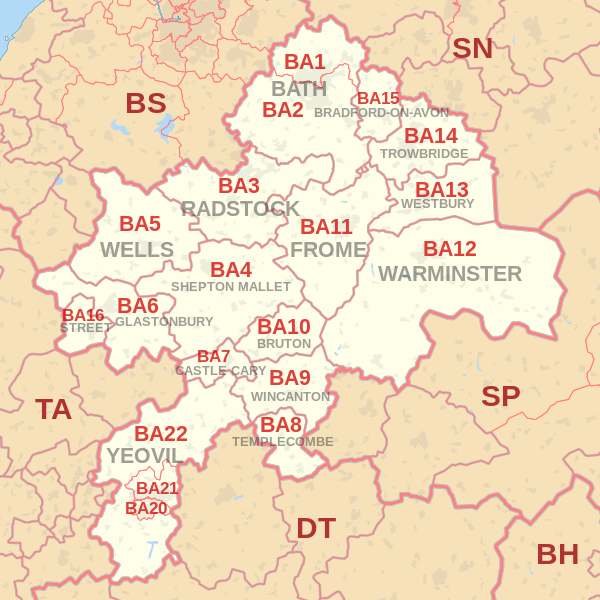

Postcodes in Bath are unlikely to score highly (via Wikimedia)

tl;dr

I used the R package {data.table} to find the highest- and lowest-scoring UK postcodes based on the sum of their numbers and letters (A = 1, B = 2, etc). You can jump to the results.

The premise

Yesterday I noticed that the hashtag #PostcodePandemonium was trending on Twitter.1 The premise was to sum the numbers and letters in your postcode, where the letters have been converted to their position in the alphabet (i.e. A = 1, B = 2, etc). Highest value ‘wins’.

Which existing postcode has the highest score? And the lowest?

Process

Attach packages

I’ve been using Matt Dowle and Arun Srinivasan’s lightning-fast {data.table} package recently and wanted to use it here to handle millions of UK postcodes. I’ve prioritised for readability in this post rather than efficiency, but let me know how to improve things.

library(data.table) # a better data.frame

library(stringr) # simple string handling

library(tictoc) # timingI’m using Sergei Izrailev’s {tictoc} package to time the processes throughout.

Get the data

The latest postcode data (February 2020) is available on the Open Geography Portal by the Office for National Statistics. From there you can download a zipped folder that contains the file we want, NSPL_FEB_2020_UK.csv.

You can download the .zip to a temporary location on your machine and then unzip() it inside {data.table}’s fread() for a rapid read.

# Path to the zipped file

zip_path <-

"https://www.arcgis.com/sharing/rest/content/items/1951e70c3cc3483c9e643902d858355b/data"

# Generate an empty temporary file

temp <- tempfile()

# Download the zipped folder to the temporary location

download.file(zip_path, temp)

# Read the CSV file in the Data subfolder of the unzipped folder

tic("CSV read complete")

pcodes_dt <- fread(unzip(temp, files = "Data/NSPL_FEB_2020_UK.csv"))

toc()

# Delete the temporary location

unlink(temp)And we can check the dimensions of this object.

# Rows and columns in the data set

dim(pcodes_dt)## [1] 2640516 41So there’s more than 2.5 million rows. Some postcodes have, however, been terminated over time. We’ll need to filter for the postcodes that are still active (thanks to Robert Kaleta for pointing this out).

We can also simplify to just the postcode column that we want using {data.table}‘s .() notation. Data in the pcds column has the consistent form of letter, letter, digit, space, digit, letter, letter (e.g. ’AB12 3CD’), which makes them relatively easy to deal with.

# Filter for empty date of termination (doterm)

# Retain only the postcode column

pcodes_dt <- pcodes_dt[is.na(doterm), .(pcds)]

# Preview

head(pcodes_dt)## pcds

## 1: AB10 1AB

## 2: AB10 1AF

## 3: AB10 1AG

## 4: AB10 1AH

## 5: AB10 1AL

## 6: AB10 1AN# Count rows

nrow(pcodes_dt)## [1] 1766067You can see that this removes a large number of terminated postcodes.

Extract

Now to extract the numbers and letters so that ‘AB12 3CD’ is broken into A, B, 12, 3, C and D, for example. Note that we want to extract multi-digit numbers if they exist within each half (the ‘outward’ and ‘inward’ parts) of the postcode, so 12 rather than 1 and 2, and 12 and 3 rather than 123.

The walrus operator (:=) is used here as a function to create new columns and assign names to them. I’ve chose to use {stringr}’s str_extract_all() function to match the strings we want. The regular expression contains values in the curly braces to indicate the desired character lengths to be matched.

This will produce two list-columns: one with the letters extracted into it and one with the numbers.

# Extract letters into one list column and numbers into another

pcodes_dt[, `:=`(letter = str_extract_all(pcds, "[:alpha:]{1}"),

number = str_extract_all(pcds, "[:digit:]{1,2}"))]

pcodes_dt## pcds letter number

## 1: AB10 1AB A,B,A,B 10,1

## 2: AB10 1AF A,B,A,F 10,1

## 3: AB10 1AG A,B,A,G 10,1

## 4: AB10 1AH A,B,A,H 10,1

## 5: AB10 1AL A,B,A,L 10,1

## ---

## 1766063: ZE3 9JU Z,E,J,U 3,9

## 1766064: ZE3 9JW Z,E,J,W 3,9

## 1766065: ZE3 9JX Z,E,J,X 3,9

## 1766066: ZE3 9JY Z,E,J,Y 3,9

## 1766067: ZE3 9JZ Z,E,J,Z 3,9Remember that {data.table} edits in place, so the pcodes_dt object will be updated and without the need to overwrite it (i.e. no need to do something like pcodes_dt <- pcodes_dt[<whatever>]).

Numbers and letters

Now to work with the number list-column. The values are currently character-class because they were extracted from the postcode strings; they need to be made numeric before they can be summed. lapply() is used here to pass the function as.numeric() to achieve this.

tic("Make numbers numeric class")

pcodes_dt[, number := lapply(number, as.numeric)]

toc()## Make numbers numeric class: 10.305 sec elapsedAnd now to work with the letter list column. The custom function in lapply() first turns the letters into the factor class, where the full set of possible levels is provided by the LETTERS vector, and then uses as.numeric() to convert each factor level to its corresponding numeric value.

This works on the principle that as.numeric(factor(c("A", "B", "C"))) becomes c(1, 2, 3). The first factor level, A gets converted to 1, B to 2 and so on.

tic("Convert letters to numbers, make numeric class")

pcodes_dt[, letter_number := lapply(

letter, function(x) as.numeric(factor(x, levels = LETTERS)))]

toc()## Convert letters to numbers, make numeric class: 31.133 sec elapsedScores

Now to separately sum the number and letter values in each row of the list-columns and add them together for the final score.

# Generate summation columns for letters and numbers separately

pcodes_dt[, `:=`(number_sum = lapply(number, sum),

letter_sum = lapply(letter_number, sum))]

# Make the sum columns numeric- rather than list-class

pcodes_dt$number_sum <- as.numeric(pcodes_dt$number_sum)

pcodes_dt$letter_sum <- as.numeric(pcodes_dt$letter_sum)

# Sum the number and letter values

pcodes_dt[, score := number_sum + letter_sum]

# The first few scores

head(pcodes_dt[, .(pcds, number_sum, letter_sum, score)])## pcds number_sum letter_sum score

## 1: AB10 1AB 11 6 17

## 2: AB10 1AF 11 10 21

## 3: AB10 1AG 11 11 22

## 4: AB10 1AH 11 12 23

## 5: AB10 1AL 11 16 27

## 6: AB10 1AN 11 18 29So you can see, for example, that AB10 1AB has a number sum of 11 (10 + 1) and a letter sum of 6 (a couple of As and Bs, so 1 + 2 + 1 + 2), totalling 17.

Results

Now to order the results, focus on the postcodes and scores alone, and preview the top and bottom scores (provided by default in {data.table}’s print method).

# Select cols and reorder by score

pcodes_dt[order(-score), .(pcds, score)]## pcds score

## 1: WV99 1ZZ 197

## 2: SS99 9YY 196

## 3: WV98 1ZZ 196

## 4: WV99 1YZ 196

## 5: WV99 1ZY 196

## ---

## 1766063: E1 0AA 8

## 1766064: B1 1BA 7

## 1766065: B1 2AA 7

## 1766066: BA1 1AA 7

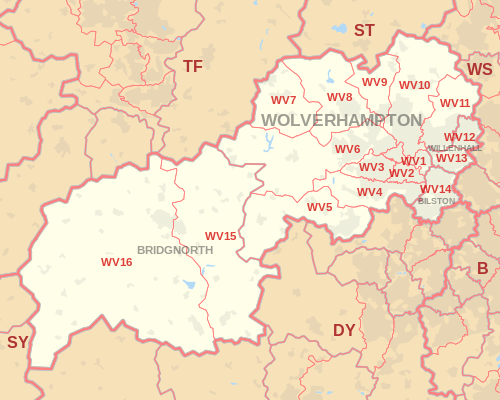

## 1766067: BA2 0AA 7So the top-scoring postcode was WV99 1ZZ with 197 points. It’s on an industrial estate in Telford, north-east of Birmingham. You can view it on Google Maps.

The lowest scoring postcodes were in Birmingham (Holloway Circus at B1 1BA and Arena Birmingham at B1 2AA) and Bath (near Bath Spa train station at BA1 1AA and south of Farmborough at BA2 0AA). They scored only 7.

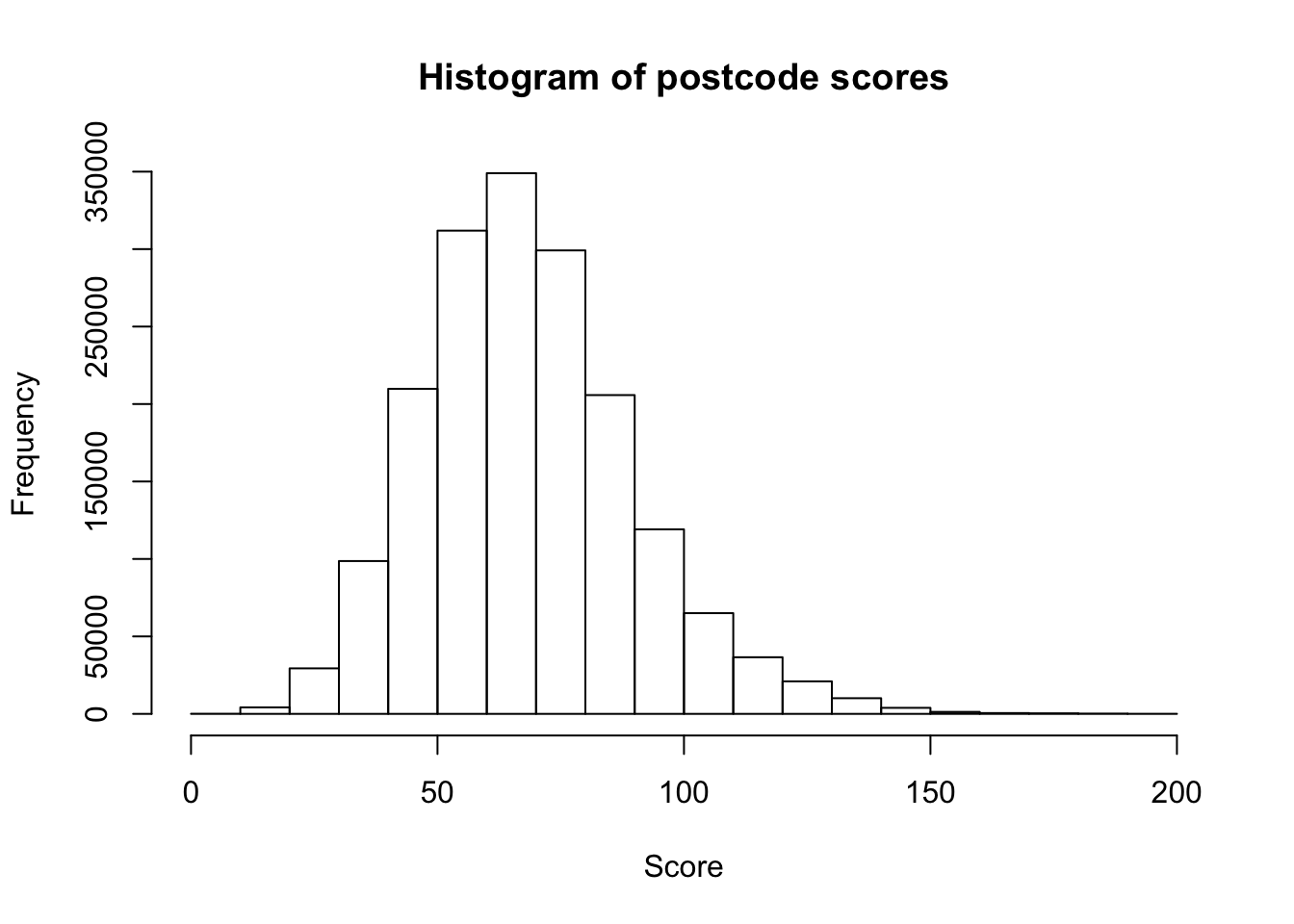

The distribution of scores looks like this:

hist(

pcodes_dt$score,

xlab = "Score",

main = "Histogram of postcode scores"

)

It’s slightly skewed, with nearly 350,000 instances of scores between 60 and 70 and very few scores over 150.

Let’s check out the summary statistics.

summary(pcodes_dt$score)## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 7.00 54.00 67.00 68.87 81.00 197.00So the mean score is just under 70.

How does your score compare?

‘WV’ provides 23 + 22 = 45 points in itself (via Wikimedia)

Session info

## ─ Session info ───────────────────────────────────────────────────────────────

## setting value

## version R version 3.6.0 (2019-04-26)

## os macOS Mojave 10.14.6

## system x86_64, darwin15.6.0

## ui X11

## language (EN)

## collate en_GB.UTF-8

## ctype en_GB.UTF-8

## tz Europe/London

## date 2020-05-17

##

## ─ Packages ───────────────────────────────────────────────────────────────────

## package * version date lib source

## assertthat 0.2.1 2019-03-21 [1] CRAN (R 3.6.0)

## blogdown 0.12 2019-05-01 [1] CRAN (R 3.6.0)

## bookdown 0.10 2019-05-10 [1] CRAN (R 3.6.0)

## cli 2.0.1 2020-01-08 [1] CRAN (R 3.6.0)

## crayon 1.3.4 2017-09-16 [1] CRAN (R 3.6.0)

## data.table * 1.12.6 2019-10-18 [1] CRAN (R 3.6.0)

## digest 0.6.23 2019-11-23 [1] CRAN (R 3.6.0)

## evaluate 0.14 2019-05-28 [1] CRAN (R 3.6.0)

## fansi 0.4.1 2020-01-08 [1] CRAN (R 3.6.0)

## glue 1.3.1 2019-03-12 [1] CRAN (R 3.6.0)

## htmltools 0.4.0 2019-10-04 [1] CRAN (R 3.6.0)

## knitr 1.26 2019-11-12 [1] CRAN (R 3.6.0)

## magrittr 1.5 2014-11-22 [1] CRAN (R 3.6.0)

## Rcpp 1.0.3 2019-11-08 [1] CRAN (R 3.6.0)

## rlang 0.4.4 2020-01-28 [1] CRAN (R 3.6.0)

## rmarkdown 2.0 2019-12-12 [1] CRAN (R 3.6.0)

## sessioninfo 1.1.1 2018-11-05 [1] CRAN (R 3.6.0)

## stringi 1.4.5 2020-01-11 [1] CRAN (R 3.6.0)

## stringr * 1.4.0 2019-02-10 [1] CRAN (R 3.6.0)

## tictoc * 1.0 2014-06-17 [1] CRAN (R 3.6.0)

## withr 2.1.2 2018-03-15 [1] CRAN (R 3.6.0)

## xfun 0.11 2019-11-12 [1] CRAN (R 3.6.0)

## yaml 2.2.1 2020-02-01 [1] CRAN (R 3.6.0)

##

## [1] /Library/Frameworks/R.framework/Versions/3.6/Resources/libraryIt originated from the social media team at a company controlled by one of the largest corporations in the world, so I don’t think it’s cynical to say that the whole thing was a marketing ploy.↩